Plotting Satellite Data

The first step is to find the satellite data. Normally, we would browse over to http://thredds.ucar.edu/thredds/ and find the top-level THREDDS Data Server (TDS) catalog. From there we can drill down to find satellite data products.

For current data, you could navigate to the Satellite Data directory, then GOES East Products and CloudAndMoistureImagery. There are subfolders for the CONUS, full disk, mesoscale sector images, and other products. In each of these is a folder for each channel of the ABI. In each channel there is a folder for every day in the approximately month-long rolling archive. As you can see, there are a massive amount of data coming down from GOES-16!

In the next section we'll be downloading the data in a pythonic way, so our first task is to build a URL that matches the URL we manually navigated to in the web browser. To make it as flexible as possible, we'll want to use variables for the sector name (CONUS, full-disk, mesoscale-1, etc.), the date, and the ABI channel number.

- Create variables named image_date, region, and channel. Assign them to today's date, the mesoscale-1 region, and ABI channel 8.

- Construct a string data_url from these variables and the URL we navigated to above.

- Verify that following your link will take you where you think it should.

- Change the extension from catalog.html to catalog.xml. What do you see?

from datetime import datetime

# Create variables for URL generation

# YOUR CODE GOES HERE

# Construct the data_url string

# YOUR CODE GOES HERE

# Print out your URL and verify it works!

# YOUR CODE GOES HERE

# %load solutions/data_url.py

# Cell content replaced by load magic replacement.

from datetime import datetime

# Create variables for URL generation

image_date = datetime.utcnow().date()

region = 'CONUS'

channel = 8

# We want to match something like:

# https://thredds-test.unidata.ucar.edu/thredds/catalog/satellite/goes16/GOES16/Mesoscale-1/Channel08/20181113/catalog.html

# Construct the data_url string

data_url = ('https://thredds.ucar.edu/thredds/catalog/satellite/goes/east/products/'

f'CloudAndMoistureImagery/{region}/Channel{channel:02d}/'

f'{image_date:%Y%m%d}/catalog.xml')

# Print out your URL and verify it works!

print(data_url)

Accessing data with Siphon¶

We could download the files to our computers from the THREDDS web interface, but that can become tedious for downloading many files, requires us to store them on our computer, and does not lend itself to automation.

We can use Siphon to parse the catalog from the TDS. This provides us a nice programmatic way of accessing the data. We start by importing the TDSCatalog class from siphon and giving it the URL to the catalog we just surfed to manually. Note: Instead of giving it the link to the HTML catalog, we change the extension to XML, which asks the TDS for the XML version of the catalog. This is much better to work with in code. If you forget, the extension will be changed for you with a warning being issued from siphon.

We want to create a TDSCatalog object called cat that we can examine and use to get handles to work with the data.

from siphon.catalog import TDSCatalog

cat = TDSCatalog(data_url)

To find the latest file, we can look at the cat.datasets attribute. Let’s look at the first five datasets:

cat.datasets[:5]

cat.datasets[0]

We'll get the next to most recent dataset (sometimes the most recent will not have received all tiles yet) and store it as variable dataset. Note that we haven't actually downloaded or transferred any real data yet, just bits of metadata have been received from THREDDS and parsed by siphon.

dataset = cat.datasets[1]

print(dataset)

We're finally ready to get the actual data. We could download the file, then open that, but there is no need! We can use siphon to help us only get what we need and hold it in memory. Notice that we're using the XArray accessor which will make life much nicer that dealing with the raw netCDF (like we used to back in the days of early 2018).

ds = dataset.remote_access(use_xarray=True)

Digging into and parsing the data¶

Now that we've got some data - let's see what we actually got our hands on.

ds

Great, so we have an XArray Dataset object, something we've dealt with before! We also see that we have the coordinates time, y, and x as well as the data variables of Sectorized_CMI and the projection information. Using the MetPy accessor for xarray, we can pull out the data we need for plotting.

import metpy

dat = ds.metpy.parse_cf('Sectorized_CMI')

proj = dat.metpy.cartopy_crs

x = dat['x']

y = dat['y']

Plotting the Data¶

To plot our data we'll be using CartoPy and matplotlib:

import cartopy.crs as ccrs

import cartopy.feature as cfeature

import matplotlib.pyplot as plt

%matplotlib inline

Let's first make a simple plot using CartoPy's built-in shapefiles.

fig = plt.figure(figsize=(10, 10))

ax = fig.add_subplot(1, 1, 1, projection=proj)

ax.add_feature(cfeature.COASTLINE.with_scale('50m'), linewidth=2)

ax.add_feature(cfeature.STATES.with_scale('50m'), linestyle=':', edgecolor='black')

ax.add_feature(cfeature.BORDERS.with_scale('50m'), linewidth=2, edgecolor='black')

im = ax.imshow(dat, extent=(x.min(), x.max(), y.min(), y.max()), origin='upper')

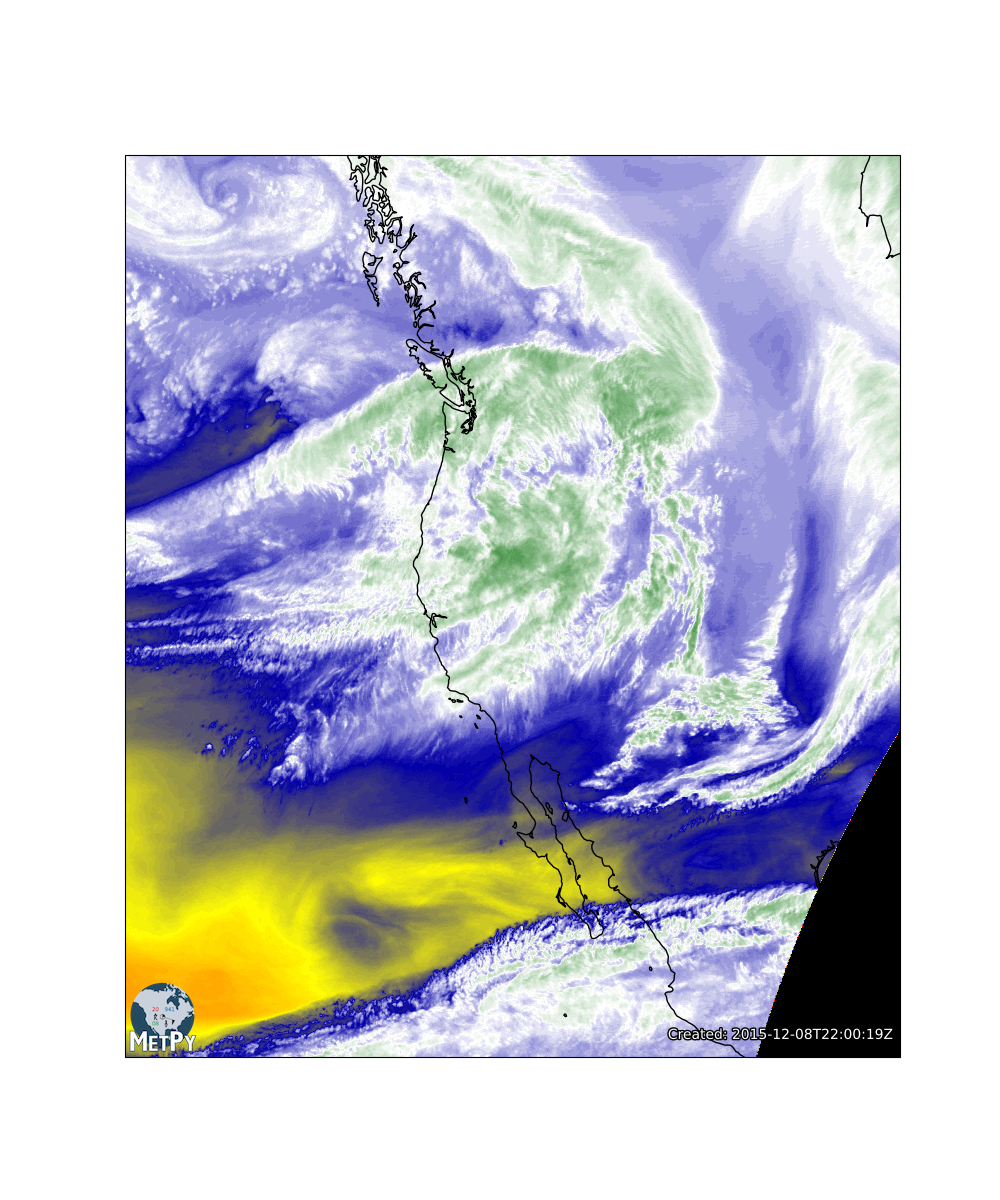

- Look at the documentation for metpy.plots.colortables here and figure out how to set the colormap of the image. For this image, let's go with the WVCIMSS_r colormap as this is a mid-level water vapor image. To see all of the colortables that MetPy supports, check out this page

- BONUS: Use the MetPy add_timestamp method from metpy.plots to add a timestamp to the plot.

- DAILY DOUBLE: Using the start_date_time attribute on the dataset ds, change the call to add_timestamp to use that date and time and the pretext to say "GOES 16 Channel X".

#Import for colortables

from metpy.plots import colortables

# Import for the bonus exercise

from metpy.plots import add_timestamp

# Make the image plot

# YOUR CODE GOES HERE

# %load solutions/sat_map.py

# Cell content replaced by load magic replacement.

#Import for colortables

from metpy.plots import colortables

# Import for the bonus exercise

from metpy.plots import add_timestamp

fig = plt.figure(figsize=(10, 10))

ax = fig.add_subplot(1, 1, 1, projection=proj)

ax.add_feature(cfeature.COASTLINE.with_scale('50m'), linewidth=2)

ax.add_feature(cfeature.STATES.with_scale('50m'), linestyle=':', edgecolor='black')

ax.add_feature(cfeature.BORDERS.with_scale('50m'), linewidth=2, edgecolor='black')

im = ax.imshow(dat, extent=(x.min(), x.max(), y.min(), y.max()), origin='upper')

wv_cmap = colortables.get_colortable('WVCIMSS_r')

im.set_cmap(wv_cmap)

#Bonus

start_time = datetime.strptime(ds.start_date_time, '%Y%j%H%M%S')

add_timestamp(ax, time=start_time, pretext=f'GOES-16 Ch. {channel} ',

high_contrast=True, fontsize=16, y=0.01)

plt.show()

More about Colortables¶

Colormapping in matplotlib (which backs CartoPy) is handled through two pieces:

- The norm (normalization) controls how data values are converted to floating point values in the range [0, 1]

- The colormap controls how values are converted from floating point values in the range [0, 1] to colors (think colortable)

Let's try to determine a good range of values for the normalization. We'll make a histogram to see the distribution of values in the data, then clip that range down to enhance contrast in the data visualization.

We use compressed to remove any masked elements before making our histogram (areas of space that are in the view of the satellite).

import matplotlib.pyplot as plt

plt.hist(ds['Sectorized_CMI'].to_masked_array().compressed(), bins=255);

In meteorology, we have many ‘standard’ colortables that have been used for certain types of data. We have included these in Metpy in the metpy.plots.ctables module. The WVCIMSS colormap is a direct conversion of a GEMPAK colormap. Let's remake that image with a range of 195 to 265 K. This was empirically determined to closely match other displays of water vapor data.

fig = plt.figure(figsize=(10, 10))

ax = fig.add_subplot(1, 1, 1, projection=proj)

ax.add_feature(cfeature.COASTLINE.with_scale('50m'), linewidth=2)

ax.add_feature(cfeature.STATES.with_scale('50m'), linestyle=':', edgecolor='black')

ax.add_feature(cfeature.BORDERS.with_scale('50m'), linewidth=2, edgecolor='black')

im = ax.imshow(dat, extent=(x.min(), x.max(), y.min(), y.max()), origin='upper')

wv_norm, wv_cmap = colortables.get_with_range('WVCIMSS_r', 195, 265)

im.set_cmap(wv_cmap)

im.set_norm(wv_norm)

start_time = datetime.strptime(ds.start_date_time, '%Y%j%H%M%S')

add_timestamp(ax, time=start_time, pretext=f'GOES-16 Ch. {channel} ',

high_contrast=True, fontsize=16, y=0.01)

plt.show()